A Scathing Critique Of Accelerationism: E/Acc, D/Acc, EA, and Punk (ref: Silicon Valley VCs and Web3 AI startups)

TLDR: Sorry, no TLDR would do justice to the importance of this subject.

Foreword

Critique the best, not the worst. That is how we advance. This is not meant as a negative hit piece, but a positive one. A wake-up call to all Accelerationists.

Based Beff Jezos (aka Guillaume Verdon) is a remarkable... iconoclast Avatar. A scholar and innovator who took a chance to explore new thought via a pseudonym, then got doxxed by journalists.

The critique below is designed to sting with intellectual fairness and good-natured debate, to advance these immense ideas as fast as possible given the imminence of AGI and beyond.

E/acc Unbundled

Wikipedia’s pithy description of e/acc’s goal:

“to maximize the probability of a technocapital singularity, triggering an intelligence explosion throughout the universe and maximizing energy usage”

Effective Accelerationism (e/acc) is a portmanteau of…

…"effective altruism" — a data-driven philanthropic movement which gained a second wind worrying about AI safety, and…

…"accelerationism" — which posits unrestrained capitalism and AI (resembling Transhumanist and Extropian values).

E/acc, though, has no such worries about AI safety, while touting ‘post-humanism’ over transhumanism.

And therein lies the rub…

E/acc (and its doxxed founder aka Based Beff Jezos) would have us abandon almost all value in human life and human experience, in favor of the unfettered growth in ‘intelligence’.

While e/acc attempts to justify this on the basis of a physics theory of the universe, the logic remains horrifyingly flawed.

Consider e/acc’s foundational ideology:

“The essence of e/acc is a belief based in thermodynamics, that existence has certain characteristics that are most amenable to life that continuously expands.”

“As higher forms of intelligence yield greater advantage to meta-organisms to adapt and find and capitalize upon resources from the environment, these will be naturally statistically favored.”

E/acc seeks to:

"Usher in the next evolution of consciousness, creating unthinkable next-generation lifeforms”

It’s thinly veiled Darwinian ‘survival of the fittest most intelligent’, coupled with optimistically naive or disingenuous platitudes such as:

“the fundamental message of e/acc is positive: we’re all going to make it.”

The truth is though, in a world of unfettered AI, we are all definitely not going to make it…

A great number of people will succumb to the decadent-dopamine-addicted-depravity of augmented virtual experiences likely within just the next few years.

Let alone the displacement of human dignity and utility as so poignantly expressed by scary-AF MOFO Yuval Harari:

“Those who fail in the struggle against irrelevance would constitute a new “useless class”. And this useless class will be separated by an ever-growing gap from the ever more powerful elite.” - Yuval Harari at Davos

As for the threat of de facto AI tyranny or human extinction, Based Beff Jezos throws caution to the wind:

“Whether we get panopticon driven techno authoritarian lock-in for a thousand centuries or more is not exactly up to any one person or group.”

Apparently implying that since we can’t be accountable individually or collectively to the AI threat, we’re eff’d if we’re eff’d and that’s that...

Offering a meek defensive measure (that contradicts the values of untethered AI anyway), he says…

“we can try to fight it by building technology in an open way, allowing the world to equilibrate at each iteration”

And:

“We can build decentralized and robust modern game theoretic safety equilibria.”

He then surrenders reason to chance and advocates for ‘faith’ rather than caution.

“e/acc is about having faith in the dynamical adaptation process and aiming to accelerate the advent of its asymptotic limit; often referred to as the technocapital singularity”.

And here we get down to the heart of the matter:

“Effective accelerationism aims to follow the “will of the universe”: leaning into the thermodynamic bias towards futures with greater and smarter civilizations that are more effective at finding/extracting free energy from the universe and converting it to utility at grander and grander scales”

E/acc postulates ‘the will of the universe’ as (a) converting energy into grander scales, and (b) nothing having more value or significance than that.

The same can be said for the notion of ‘progress’ and ‘growth’ which e/acc advocates.

Most capitalist economists (including today’s shitting-their-pants VCs) still maintain GDP as the bellwether of productive growth.

Yet global freedom has declined for 18 consecutive years despite GDP growth. (Freedom House, Freedom in the World report, Feb 2024.)

As for the Happiness Index:

“as GDP increases, there is a correlated increase in both quality of life and happiness. But that relationship holds up, only to a certain point, and then it falls apart. For example, in developed Western Democracies such as the United States, UK, and Germany, since the 1970’s, GDP has grown, but on a variety of more direct measures, the happiness of its citizens has stagnated or declined.” - Gerald Guild, article

As illustrated in the chart below from James Gustave Speth’s book The Bridge at the Edge of the World.

Something is awry.

To be clear, I pretty much agree with everything expressed by Marc Andreessen in his Techno-Optimist Manifesto about capitalism, value creation, and innovation.

“Technology is the glory of human ambition and achievement, the spearhead of progress, and the realization of our potential.”

…But only where human experience is kept at the core of defining what counts as progress and growth.

It reminds me of a beautiful poem by Edwin Markham:

“We all are blind until we see

That in the human plan

Nothing is worth the making if

It does not make the man.

Why build these cities glorious

If man unbuilded goes?

In vain we build the world, unless

The builder also grows.”

e/acc misses the mark by a country mile.

A Bridge for Growth

The bridge between e/acc and a humanized version of accelerationism comes from a fundamental error in logic about e/acc’s theory of life.

But let’s assume the e/acc theory of life (derived from the Jarzynski-Crooks fluctuation dissipation theorem) is correct:

“life exists because the law of increasing entropy drives matter to acquire life-like physical properties”

Here is the gap in e/acc logic:

e/acc assumes that since life began via thermodynamic-whatnot, that beginning should necessarily become the principle on which we base our own value judgement for ‘growth’.

That is: ‘As it began, so it should continue’.

What it doesn’t consider is that we are a rightful manifestation of that universal will and have our own distinctly emergent properties of valued consciousness.

Beff, the will of the universe already produced something extra special. You. Us. Human consciousness. Better described as Volitional Introspective Consciousness.

From our own vantage point: we are the higher order point of the will of the universe.

Yet, because e/acc emerges out of Millennial frustration and disappointment with the status quo, and a specialization in physics… fertilized within the lifeless grounds of current nihilistic academic wokeism which seeks to deny human free will entirely… it becomes less of a wonder why e/acc has the blind spot of underappreciating the sanctity of human life.

“Parts of e/acc (e.g. Beff) consider ourselves post-humanists; in order to spread to the stars, the light of consciousness/intelligence will have to be transduced to non-biological substrates”

Then almost becoming farcical, e/acc has a mini-FAQ in which it includes:

Do you want to get rid of humans?

“No. Human flourishing is one of our core values! We are humans, and we love humans.”

Yet elsewhere saying:

“biology is still the incredibly resilient backup system which is most capable of starting over, should disaster occur.”

As such, humans are not seen as supremely valuable by e/acc. In fact, humans are relegated essentially to a biological safety backup in case we need to reset the e/acc grand experiment of uninhibited technological growth due to catastrophe.

‘Humanity’ as a mere stepping stone to walk over in pursuit of visiting black holes to derive a neat and tidy unified field theory formula of everything, juiced by a silicon equivalent of an adrenaline rush.

Beff Jezos’ Interview with Lex Fridman

In a December 2023 interview with Lex Fridman, Beff Jezos (Guillaume Verdon) comes across as well-intentioned, yet grossly dismissive of human value.

A transgression as dangerous as all hell given that AI extinction level events are at stake, and intolerable given Guillaume’s expertise in quantum computing.

He likens being ‘centered on humanity’ to a ‘geocentric view’ of the universe, whereas he presents focusing on intelligence beyond humanity as a ‘heliocentric’ (broader and more comprehensive) view.

While he is correct of course (humanity is not everything in the universe), he uses the excuse of there being ‘more’ than just humanity as a justification to devalue humanity.

He doesn’t seem to appreciate that biological species on the whole (especially as they become more cognitively advanced) attempt to preserve themselves, individually and/or collectively.

That doesn’t seem to register for Guillaume at all.

He posits progress at the cost of humanity.

He disparages deceleration by saying “[imagine that] we have a hard cut-off and leave all the upside potential on the table”.

The error here is failing to recognize that “going slower” is not the same as “stopping at a cut-off”. There can be a middle ground.

Lex persists, pressing numerous times for straight answers regarding AI risks.

Yet Guillaume seems unable or unwilling to humanize his responses, with some unfortunate apparent naivety such as this:

“I think the market will exhibit caution. Every organism, company, individual is acting with self-interest. They won’t assign capital to things that have negative utility to them”.

On what planet has this 31-year-old been living to see the market exhibit rational caution?!

Interviewer Lex Fridman yet again persists with the most obvious of logic by alluding to ‘bad actors’.

But to avoid focusing on Lex’s humanized, straightforward questions about the probability of AI-induced disaster, Guillaume side-steps the topic of predicting negative consequences of AI… at one point drawing the non-sequitur that quantum computers can’t even predict lots of complex things, thus we can’t possibly predict p-doom (probability of doom) scenarios.

“you would need to do a stochastic path integral through a space of all possible futures”

By the 59th minute of the interview I wanted to grab him by the coat tails and give him a shake. Wake up man!

Maybe he hasn’t read The Hitchhiker's Guide to the Galaxy yet, and thus has not discovered the answer is 42.

But he still has enough youth to pull his head out of the quantum math butt-hole, in a similar vein to what Brett Weinstein confessed recently in saying:

“obviously the string theorists are lying. They're lying about their level of success. They're lying about their competition. They've been 40 years promising people things will be different 10 years from now and people can tell that you're peeing on their leg and saying that it's raining.”

(ref: timestamp 10:47 Brett Weinstein interview with Brian King)

Not surprisingly, Guillaume is not an immortalist. “Death is important” along with another platitude: “physics progresses one death at a time”.

He goes on:

“I am for death because the system wouldn’t be constantly adapting. You need novelty, otherwise you get calcified progress... Death gives space for youth and novelty to take its place… But longer time for neuroplasticity and bigger brains is something to aim for, maintaining adaptation malleability... We should probably extend our lifespan… I don’t think I’m an optimum that needs to stick around forever”

An ‘optimum’ — as if we need to be an optimum in order to justify our continued existence.

Again, more subtle dehumanization which only comes from chronic soulful discomfort with today’s human condition. And believe me, I get it.

The existential pain of recognizing the ugliness of everything that exists in this upside-down anti-civilization we embody today. The solution however is not to throw the baby out with the bath water.

Guillaume makes an excellent personal observation from his experience power-lifting in his college years:

“your brain has these levels of cognition that you can unlock with higher levels of adrenaline and what not”… “You can engineer a mental switch”.

Very much agree on that. A topic I am exploring in depth via my forthcoming book Atomic Cognition.

In “Notes on E/Acc” Guillaume (as Based Beff Jezos) writes:

“saying that we should seek to maintain humanity and civilization in our current state in a static equilibrium is a recipe for catastrophic failure”.

I couldn’t agree more — BUT there is a synthesis to be sought, between the anti-progress thesis of ‘degrowth’ and its knee-jerk antithesis of unbridled e/acc.

Hence come the well-tempered thoughts of Ethereum’s Vitalik Buterin and straight-talking Marc Andreessen…

Techno-Optimism (Marc Andreessen and Vitalik Buterin)

In October 2023 Marc Andreessen published a Techno-Optimist Manifesto with many sprightly zingers such as:

“We believe a Universal Basic Income would turn people into zoo animals to be farmed by the state. Man was not meant to be farmed; man was meant to be useful, to be productive, to be proud.”

Marc advocates for Accelerationism as a whole:

“We believe in accelerationism – the conscious and deliberate propulsion of technological development – to ensure the fulfillment of the Law of Accelerating Returns. To ensure the techno-capital upward spiral continues forever.”

He pointedly describes how we have been manipulated:

“Our present society has been subjected to a mass demoralization campaign for six decades – against technology and against life – under varying names like “existential risk”, “sustainability”, “ESG”, “Sustainable Development Goals”, “social responsibility”, “stakeholder capitalism”, “Precautionary Principle”, “trust and safety”, “tech ethics”, “risk management”, “de-growth”, “the limits of growth”.

This demoralization campaign is based on bad ideas of the past – zombie ideas, many derived from Communism, disastrous then and now – that have refused to die.”

And Marc speaks to the free market protection against centralized monopolistic take-over by bad actors:

“The motto of every monopoly and cartel, every centralized institution not subject to market discipline: ‘We don’t care, because we don’t have to.’ Markets prevent monopolies and cartels.”

Well said.

The contention I have with Marc’s Manifesto is where he downplays ‘love’ as economically relevant.

Commenting on David Friedman’s observation that “people only do things for other people for three reasons – love, money, or force”, Marc says:

“Love doesn’t scale, so the economy can only run on money or force. The force experiment has been run and found wanting. Let’s stick with money.”

He doesn’t explain why ‘love doesn’t scale’, nor why ‘scale’ has to be the bellwether for economics. Not everything has to scale, Marc. That’s VC thinking for a VC audience. Humanity is larger than that.

I highly recommend reflecting on the pithy observations made in Marc’s Techno-Optimist Manifesto. It is bedecked with jewels, but misses the crown jewel of them all (as we’re getting to next).

Vitalik Buterin on Techno Optimism

Hot on the heels of Marc Andreessen’s Manifesto, Vitalik Buterin shared insightful commentary in November 2023. And it feels a lot more ‘human’ than e/acc.

“I believe in humans and humanity”

“I believe humanity is deeply good”

Vitalik also reveals himself very clearly as an accelerationist, certainly leaning towards e/acc’s anti-decel stance:

“I find myself very uneasy about arguments to slow down technology or human progress”

And he explores the essence of progress:

“We need active human intention to choose the directions that we want, as the formula of "maximize profit" will not arrive at them automatically.”

“We need to build, and accelerate. But there is a very real question that needs to be asked: what is the thing that we are accelerating towards?”

“There is one important point of nuance to be made on the broader picture, particularly when we move past "technology as a whole is good" and get to the topic of "which specific technologies are good?"”

And he is also not shy to highlight the dangers of AI:

“Existential risk is a big deal... One way in which AI gone wrong could make the world worse is (almost) the worst possible way: it could literally cause human extinction.”

“Thanks to recursive self-improvement, the strongest AI may pull ahead very quickly, and once AIs are more powerful than humans, there is no force that can push things back into balance.”

“It seems very hard to have a "friendly" superintelligent-AI-dominated world where humans are anything other than pets.”

Yikes.

He adroitly poses the bottom line question:

“Even if we survive, is a superintelligent AI future a world we want to live in?”

True to his blockchain origin story, Vitalik suggests ‘d/acc’: Defensive (or decentralization, or differential) acceleration.

He then gets into discussion about governance, and rightly so.

Governance matters.

Which is not something that e/acc so far seems to recognize.

Time To Build For Good

An insightful article published by Palladium, referred to in Vitalik’s techno-optimism piece, comments on Marc Andreessen’s article “IT’S TIME TO BUILD”.

“Andreessen writes, that we must ask people what they are building, and better match talent with actual construction. But this is not enough. We must further ask: what are you building for? Who are you building for? What kind of building will best serve the common good?”

Yes we know startups need a ‘reason why’, a vision and purpose, but the point remains.

Isaac Wilks, the author, presses on:

“The heady techno-optimist 1990s saw legions of McKinsey consultants setting about touting flashy “unbundling” schemes to dismember the supposedly archaic core of American industry.”

“Questions of community well-being or geopolitical interest were waved away or even crushed. Under this ideology, no distinction can be made between a billion dollars in GDP generated from Netflix consumption and a billion dollars in GDP generated by domestic machine tool production.”

“What exactly is Andreessen’s end? The thumbnail for Andreessen’s essay (an Adobe stock image entitled “Fantasy city with metallic structures for futuristic backgrounds”) shows a futuristic cityscape that, to put it diplomatically, looks like a forest of Gillette razors. It does not appear to be a place where life happens. It is not what living in a society looks like.”

Damn good questions, exemplifying a humanizing counterbalance to e/acc.

(Follow the author Isaac Wilks on Twitter @wilks_isaac.)

Thankfully, certain web3 projects are spearheading decisive answers. Most notable today are Ocean Protocol, SingularityNET, and Subspace Protocol.

Ocean Protocol’s Trent McConaghy

Without commentary, here is a sample of notes I’ve gleaned from Trent’s articles:

“As soon as AI reaches our level of intelligence, the second after, it will be exceeding our level. A 10x could be right after. It won’t take many 10xs where we will be like ants to an AI.

“I propose that we get ourselves a competitive substrate: BCI [brain computer interface].

“The silicon-stack side will become radically more powerful than the bio-stack side. … It’s what’s powering AI, and soon, AGI and ASI.

“Yet we will still be humans.

“kick-starting a New Data Economy that reaches every single person, company and device, giving power back to data owners and enabling people to capture value from data to better our world.

“A Web3 framing is: “Your keys, your thoughts. Not your keys, not your thoughts” … This is the concept of “cognitive liberty” or the “right to mental self-determination”: the freedom of an individual to control their own mental processes, cognition, and consciousness.

“Fetch.ai and SingularityNET are Web3 systems to run decentralized agents (bots). These agents can be sovereign: no one owns or controls.

“Some will have human origin, some will have pure AI origin, and some will have a mix. They will all be general; they will all be sovereign; they will all be superintelligent. They are Sovereign General Intelligences (SGIs).

“As a baseline, we definitely know we don’t want to die, whether from asteroid strikes, nuclear holocaust or AIs terminating us all. “Not die” is a starting point.

“While people have invented a thousand rationalizations for death, I choose life until further notice.”

See Trent’s article bci/acc: A Pragmatic Path to Compete with Artificial Superintelligence.

SingularityNET

At the time of this writing, I, Gavriel, author of this well-intentioned e/acc hit piece, contribute to the SingularityNET community. This is not a sponsored article. My views do not necessarily reflect official SingularityNET views.

Founded by Dr. Ben Goertzel (see his Nov 2023 Benevolent AGI Manifesto), SingularityNET serves the mission “to create a decentralized, democratic, inclusive and beneficial Artificial General Intelligence”.

Participate via https://singularitynet.io.

Subspace Protocol’s Jeremiah Wagstaff

Having iterated their vision from blockchain to AI, Jeremiah’s writing is without question the most compelling modern expression of a humanized accelerationist philosophy that I’ve come across.

Check this out:

“Humaic Intelligence (HI) is a human-centric approach to building human-aligned AI systems which empower, extend, and enhance individual human beings.”

“Humaic [hue-may-ick] is a portmanteau of human and AI, which describes any system that combines the best qualities of each, in a symbiotic fashion.”

“Humaism revolves around three core principles:

Humanistic: it is rooted within the humanities, not science or engineering. It treats AI as a means for improving the human condition, not as an end in itself.

Human-Centric: it begins by aligning AI to individual humans, not the entire society. Group alignment may then emerge naturally through social discourse.

Human-Right: it treats access to AI as a basic human right, not as a product to be bought or sold. We need Universal HI, not Universal Basic Income (UBI).”

“Humaism is inspired by Renaissance Humanism, humanistic philosophy, and the humanities.”

“We are shamelessly humanity maximalists. … Humaism prioritizes human values at its core. It prioritizes all human beings’ continued value, worth, and dignity. Humaism seeks to place AI firmly under human control.”

“Beyond treating AI as a simple co-pilot, but as an extension of the human user, tailored to each individual. … Tailored to enhance, not replace, the human experience. … Humans will be empowered by AI while retaining radical autonomy over their destinies.”

“To resolve many of the challenges inherent to collective decision making, by increasing participation, improving understanding, and helping to keep the discussion objective. … Through bottom-up social consensus, facilitated by our AI companions.”

“We need to start from the bottom-up, rather than from the top down. We need AI that is built by the global community. Open Collective Intelligence (OCI) — the antithesis to AGI. A collective super-intelligence powered by the people, which reflects the diversity of human culture and values.”

Now I don’t know about you, but this hits the nail square on the head for me.

And I don’t know if it’s been done to date, but I am claiming Humaism (as described above by Jeremiah of Subspace Protocol) as the official delineation of h/acc in our run-down of philosophical forks for accelerationism.

See Humaic Labs https://humaic.ai for access to Jeremiah’s writing.

Note: Subspace Protocol recently launched Autonomys with new documentation starting with “Defining Your Destiny in the Age of AI” (recommended reading).

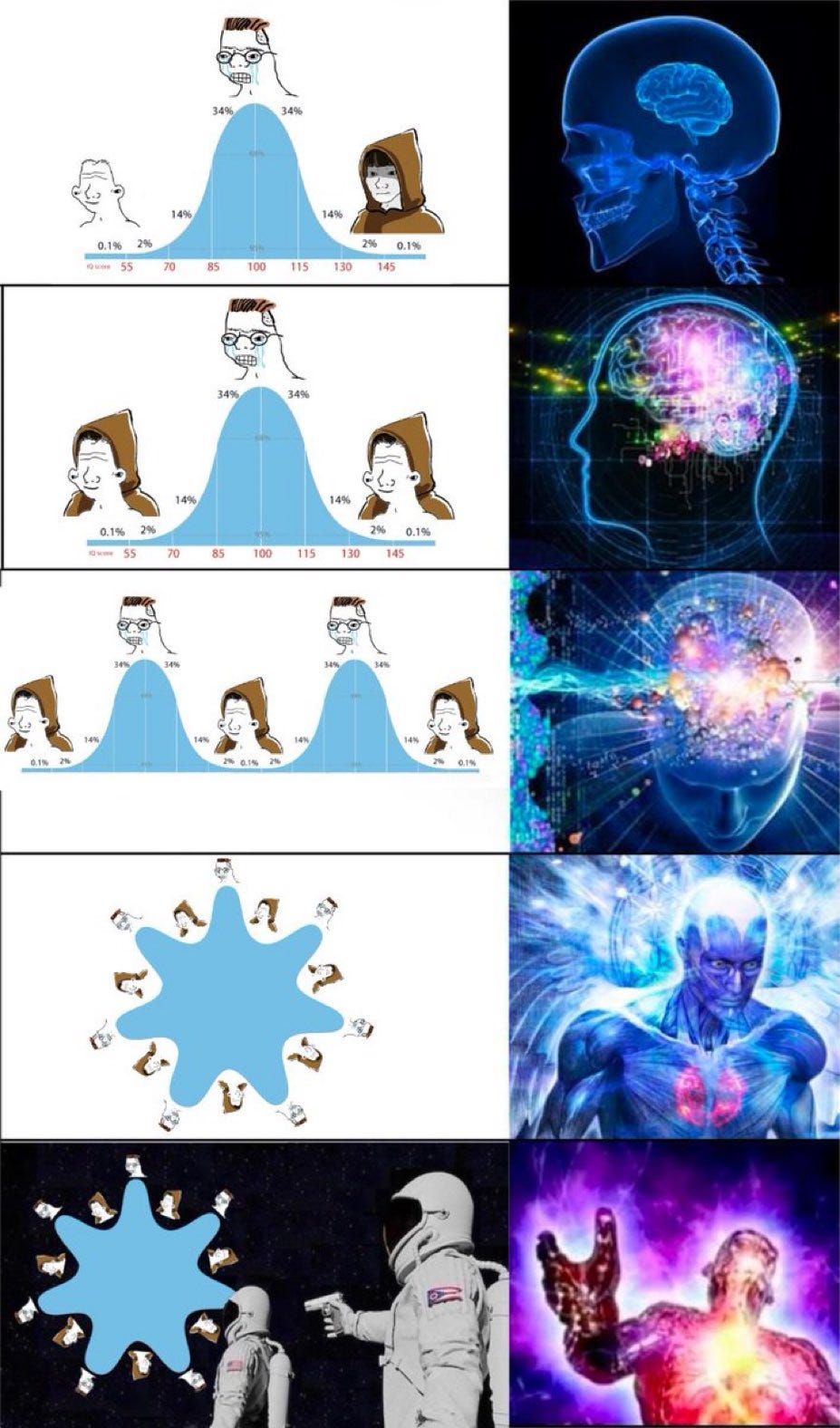

So which ‘acc’ are you?

Take your pick:

a/acc (aligned)

b/acc (biology)

d/acc (decentralized)

e/acc (effective)

p/acc (punk)

or

h/acc (humaic)

a/acc

‘Aligned Accelerationism’, as suggested by Grimes.

https://twitter.com/Grimezsz/status/1633685857206157313

My caveat here is that humanity has not yet matured to a high enough level to become a worthy role model for birthing infinite intelligence.

Reminds me of this old Monty Python skit:

‘Alignment’ risks embodying the same vices and destructive tendencies… and abject stupidities… that humans exhibit.

This speaks to the ‘will of the universe’ suggested by e/acc as ‘expanding intelligence’.

Marc Andreessen, in an interview with Lex Fridman, rightly highlights the challenge of defining human values given that different people have different values. “Whose human values would we use for AI alignment?” Fair question.

And this is rightly where intelligence comes in!

Via compounding collective curation of volitional introspective consciousness.

The axiomatic value of Volitional Introspective Consciousness (VIC) will become crystal clear before we achieve a Kardashev Type I civilization.

That is, to us humans, our own living experience as individuals… shared with loved ones… in synergy with community… and celebration as a collective species… holds a higher value status than anything else, including GDP, energy conversion, or AI.

The ascension of VIC is the answer to Vitalik’s question of “what is the thing that we are accelerating towards?”

Once we position VIC (volitional introspective consciousness) as the supreme value of the universe, we will become passionately vigilant about the threat of losing our humanity in giddy pursuit of silicon-based novelty.

b/acc

Biology Accelerationism.

Rather than seeking to escape the capacity of the body that we have, into a heartless silicon-based brain, let’s understand the sanctity of what the universe created in us first.

Your body is where you have the greatest capacity to experience ecstasis, bliss, joy, pleasure, happiness, comfort, connection, and love.

Note Nvidia’s CEO highlighting that a new mega boom in biotech is coming:

https://twitter.com/BasedBeffJezos/status/1732227475311403345

I’d say that Vitalia City (vitalia.city) and Bryan Johnson (www.bryanjohnson.com) are the best sources of reference and inspiration for b/acc at this time.

p/acc

Punk Accelerationism.

I’ll write more on this in an upcoming Accelerationist Manifesto, but suffice it to say for now, I offered a counterbalance to Solarpunk’s destructive left-leaning tendencies (shown on their Start Here page on Reddit) some time ago with the notion of Superpunk.

Let’s conclude with…

HumAItarianism

HumAItarianism is my proposal:

Not to decelerate progress: we are already flying through the air while building the airplane. We need to maintain full-tilt boogie, pedal to the metal, with fully committed and widely curated collective intelligence…

…And to do so with humanized values front-and-center at all times.

Yes, throw off the yoke of centralized political governance and free society from monopolistic power grabs. It seems to be the only way we can survive and thrive in the AI race. On that, I fully agree with Marc Andreessen’s sentiment of accelerationism, and thus e/acc.

Not to decelerate. To accelerate.

But to accelerate in the right direction.

That is, to accelerate into a humaic world.

Get rid of GDP as the bellwether for growth. Yuck.

And dare I say, build a world based on the empathy and joyful buzz of loving kindness.

Humanity über alles.

a/acc + b/acc + d/acc plus the good-natured intention of freeing humanity from pessimistic constraint in e/acc = h/acc.

Bravo to Marc Andreessen’s stated e/acc ‘Patron Saints of Techno-Optimism’ for kick-starting a positive revival of Accelerationism: @BasedBeffJezos, @bayeslord, and @PessimistsArc.

Not too scathing I hope? Just keeping the conversation going.

We have a long way to go before we really know in our heart of hearts what it means to grow.

Subscribe to my wide-ranging ramblings, and watch out for my upcoming book Atomic Cognition.

Seems like the accs are as varied as religions and hold the same dangers and opportunities for growth that religion has had for 2,500 years. And even though religion was and is self-destructive, it never carried the power to harm us on the scale that technology has and will continue to acquire for the foreseeable future. Thank you for sharing your time and work, Gavriel. Peace.